Approach

We believe transparency and fairness are key to making lossy compression comparisons. In comparing SVT-AV1-PSY to other image compression algorithms, we strove to ensure each encoder was represented by its best-scoring implementation. We consulted with the developer of aom-av1-psy101, a perceptual fidelity-focused aomenc fork, to benchmark aomenc at its maximum efficiency. We allowed cjxl to use the maximum effort level available, even though this made it encode significantly slower than the other encoders we tested. We also gave aomenc a speed advantage over our encoder. For JPEG, we used the best JPEG encoder available for perceptual fidelity, Google's jpegli.

Configuration

For our testing, we used a fork of libavif that properly supports SVT-AV1-PSY and aom-av1-psy101. Our comparisons are up to date as of 21 October 2024, when benchmarking was performed on SVT-AV1-PSY commit ca7c032. The encode settings used for each encoder are as follows:

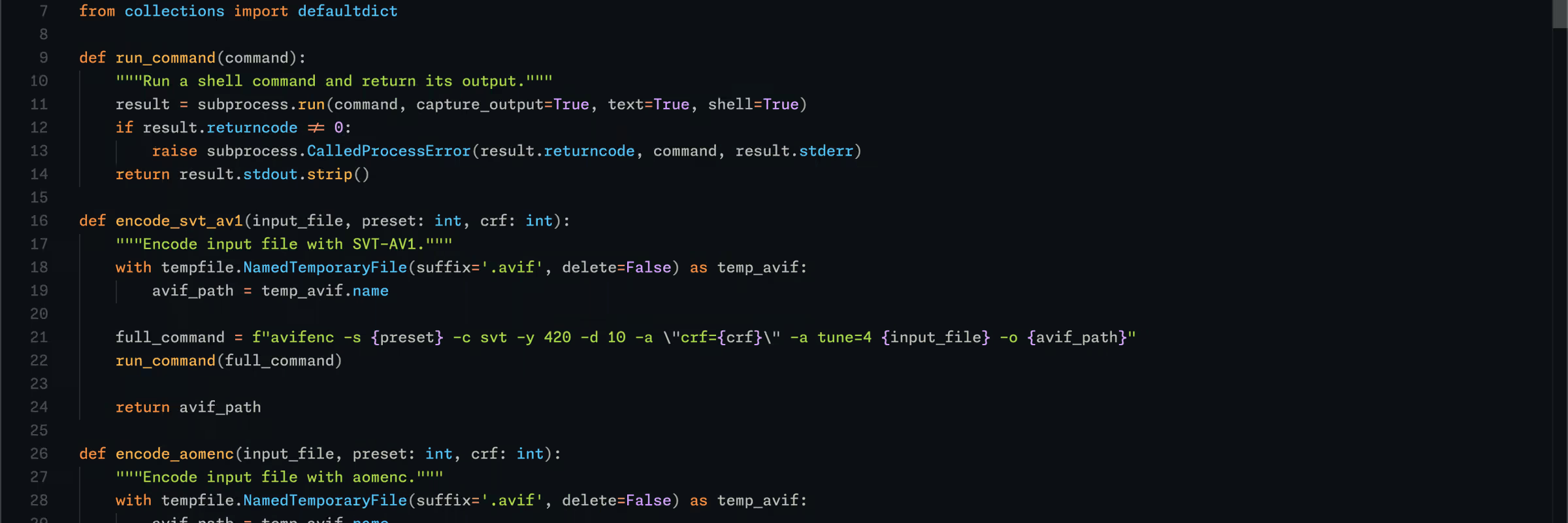

- SVT-AV1-PSY:

  avifenc -s 4 -c svt -y 420 -d 10 -a crf=XX -a lp=5 -a tune=4 input -o output.avif

- aomenc:

  avifenc -j 8 -d 10 -y 444 -s 4 --min 0 --max 63 --minalpha 0 --maxalpha 63 -a end-usage=q -a cq-level=XX -a tune=ssim -a quant-b-adapt=1 -a deltaq-mode=2 -a sb-size=64 -a sharpness=1 input -o output.avif

- cjxl:

  cjxl input output.jxl -q XX -e 10

- cjpegli:

  cjpegli input output.jpg -q XX -p 2

If you'd like more information, you can see the script we use to plot efficiency for each encoder.
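As a rough illustration of how the XX placeholder in the settings above could be swept across several quality values, here is a minimal Python sketch. It is not the script referred to above. The helper names (`build_command`, `sweep`), the output naming scheme, and the example quality values are all hypothetical; only the command lines themselves are taken from the list above.

```python
import shlex
from pathlib import Path

# Command templates copied from the encode settings listed above.
# "{q}" stands in for the XX placeholder; "{src}" and "{dst}" are the
# input path and the output path without its extension.
TEMPLATES = {
    "svt-av1-psy": "avifenc -s 4 -c svt -y 420 -d 10 -a crf={q} -a lp=5 "
                   "-a tune=4 {src} -o {dst}.avif",
    "aomenc": "avifenc -j 8 -d 10 -y 444 -s 4 --min 0 --max 63 "
              "--minalpha 0 --maxalpha 63 -a end-usage=q -a cq-level={q} "
              "-a tune=ssim -a quant-b-adapt=1 -a deltaq-mode=2 "
              "-a sb-size=64 -a sharpness=1 {src} -o {dst}.avif",
    "cjxl": "cjxl {src} {dst}.jxl -q {q} -e 10",
    "cjpegli": "cjpegli {src} {dst}.jpg -q {q} -p 2",
}


def build_command(encoder, src, dst_stem, quality):
    """Return the argument list for one encode (hypothetical helper)."""
    # Split the template first, then fill in each token, so that paths
    # containing spaces stay a single argument.
    tokens = shlex.split(TEMPLATES[encoder])
    return [t.format(q=quality, src=src, dst=dst_stem) for t in tokens]


def sweep(encoder, src, qualities):
    """Build one command per quality value (hypothetical helper)."""
    stem = Path(src).stem
    return [
        build_command(encoder, src, f"{stem}_{encoder}_q{q}", q)
        for q in qualities
    ]


if __name__ == "__main__":
    # Example quality values are placeholders, not the ones we benchmarked.
    for cmd in sweep("cjxl", "input.png", [60, 75, 90]):
        print(shlex.join(cmd))
```

Each returned list can be passed to `subprocess.run(cmd, check=True)` to perform the encode. Note that the quality scales are not interchangeable: crf and cq-level improve quality as the number decreases, while the -q values for cjxl and cjpegli improve quality as the number increases, so each encoder needs its own range of values.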

Datasets

To provide valuable compression efficiency numbers for a wide variety of image content, we hand-picked three compelling datasets for our testing.

- CID22 Validation Set: The reference images in Cloudinary's validation dataset were used as our primary resource during testing. It is a subset of the Cloudinary Image Dataset '22 (CID22) which is described as "... a large image quality assessment (IQA) dataset created in 2022, consisting of 22k annotated images based on 250 pristine images ..." (Cloudinary, 2022).

- gb82 Dataset: The gb82 Dataset (direct download) was used to validate our results on a dataset consisting entirely of photographic content. 25 photographic images were hand-picked to represent a breadth of photographic content to ensure encoders cannot overfit by only minimizing certain classes of artifacts.

- Daala subset1: The Daala subset1 dataset contains a variety of larger photographic images, and was used to validate our results on a dataset representative of higher-quality photographic content that one might serve on a webpage.

The graphs presented on the AVIF page are real charts generated from these datasets using the encoding settings provided in the section above.

Visual Comparisons

Stay tuned - these are coming soon!